Syncing Notion databases into Tinybird using notion-objects

Learn how to synchronize data from Notion databases into Tinybird data sources with just a few lines of Python code with the notion-objects library.

In this blog post, I want to show you how you can, with just a few lines of Python code using the notion-objects library and the Tinybird API, synchronize data from Notion databases into Tinybird data sources.

I’ve become a big fan of both Notion and Tinybird. They are excellent products and I use them daily at LocalStack. We’ve been using Tinybird as a consolidated data warehouse for a while, and query it for all sorts of analytics. We’ve also been steadily building up data in various Notion databases, including our project roadmap or customer incident tracking, that we wanted to start running queries against. So it was clear we had to start syncing data from Notion into Tinybird. I spent some time last weekend to create a library to make it easier to get data out of Notion. We now use the library in scheduled AWS Lambda functions that do the synchronization. After reading this article, you should be able to build something similar.

Prerequisites

Requirements for this:

- Python 3

- A Notion account

- A Tinybird account and workspace

- A basic understanding of the three

Creating a Notion integration

I’m assuming you have a notion account and have some familiarity with it. To create an API token for Notion, we first need to create what Notion calls an integration, and connect the integration to the database we want to synchronize. The Getting Started guide in the Notion developer docs explains this very concisely.

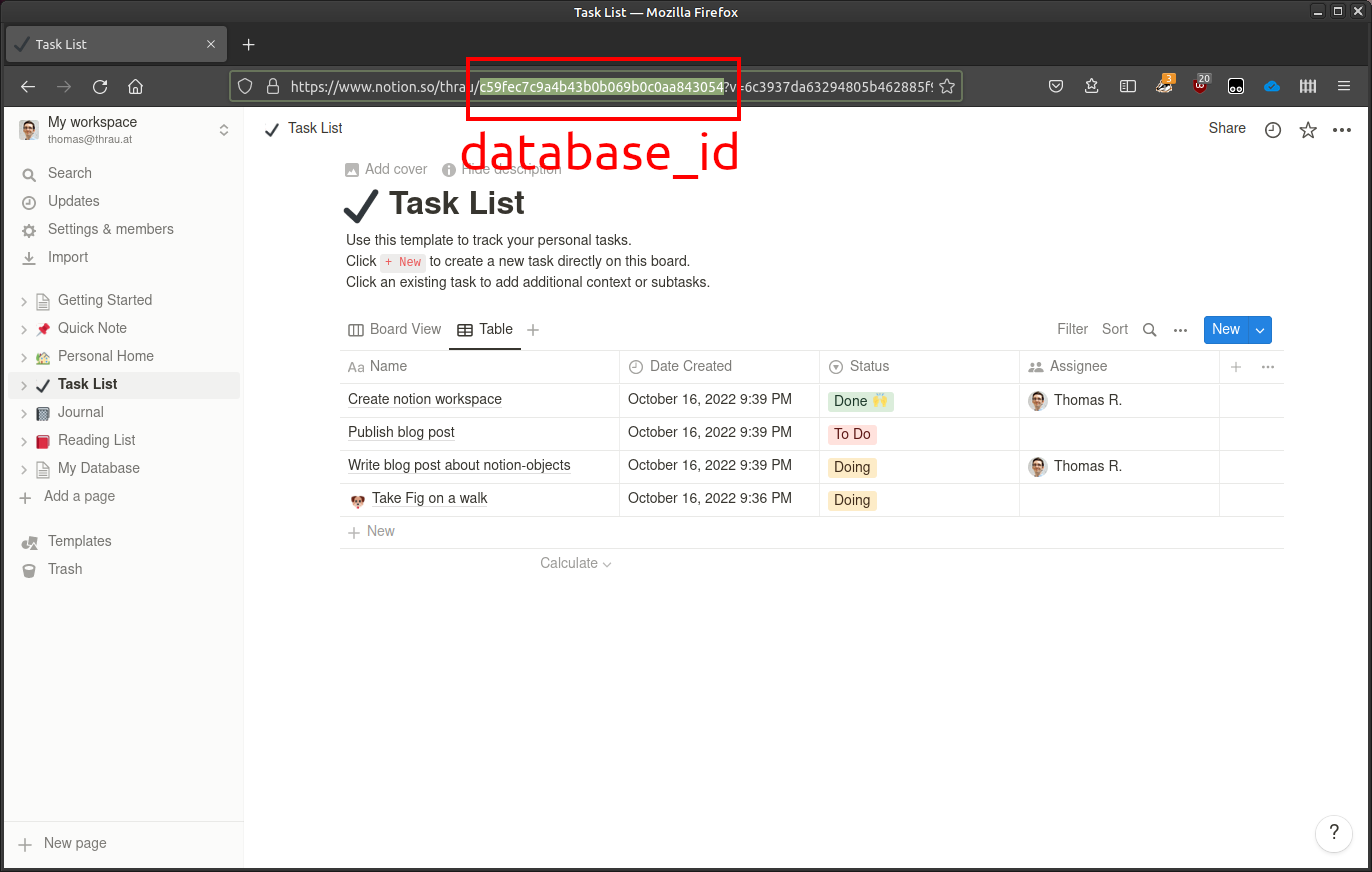

Here’s my notion database I use for demonstration. I also highlighted where to find the database id (needed later).

notion-objects

notion-objects is a data mapper for mapping Notion database records to Python objects. To connect to Notion, it uses notion-sdk-py, a Notion SDK for Python.

Simply install notion-objects using pip:

pip install notion-objects

With notion-objects, you can easily iterate over all records in a database,

and access Notion database fields like Python object attributes.

You can build your own data models and mappings, which is explained in the notion-objects README, but the default dynamic mapping works pretty well for basic use cases.

Here’s some example code.

import os

from notion_client import Client

from notion_objects import Database

def main():

notion_token = "" # notion integration token goes here

database_id = "" # add your database ID here

# create the notion client

client = Client(auth=notion_token)

# the notion-objects database

db = Database(database_id, client)

for record in db:

print(record.id, record.Name, record.Assignee, record.Status)

if __name__ == '__main__':

main()

For my database, this prints:

10289cdc-7987-4f2d-8f6d-be8ab7d4308e Create notion workspace ['Thomas R.'] Done 🙌

44d54963-c2ef-40de-80ef-d25fe6df444f Publish blog post [] To Do

8b8dd97e-2e7f-470a-b076-3958100293f6 Write blog post about notion-objects ['Thomas R.'] Doing

964da614-f41f-4930-aed6-ca50f10603ee Take Fig on a walk [] Doing

Record objects also have to_json and to_dict converters, that will later come in handy.

Getting data into Tinybird

The events endpoint of Tinybird is convenient because it accepts NDJSON and will also create the datasource if it does not exist. So all we need is a datasource name, an NDJSON payload, and a POST request to the events endpoint.

# build an ndjson batch that we later sync into tinybird

batch = []

for record in db:

# flat=True flattens date range properties into two columns

batch.append(record.to_json(flat=True))

data = "\n".join(batch)

data will now contain a new-line delimited JSON (NDJSON) payload, which we can post directly to the events endpoint:

# converts something like "My *fantastic* database" into "my__fantastic__database", which is a vald tinybird datasource name

tinybird_datasource = re.sub('[^0-9a-zA-Z]+', '_', db.title.strip()).lower()

# post the batch into tinybird and create the table if it doesn't exist

requests.post(

'https://api.tinybird.co/v0/events',

params={

'name': tinybird_datasource,

'token': tinybird_token,

},

data=data

)

Code so far

Let’s put together what we have so far:

import re

import requests

from notion_client import Client

from notion_objects import Database

def main():

notion_token = "secret_"

tinybird_token = "p."

database_id = "c59fec7c9a4b43b0b069b0c0aa843054"

# create the notion client

client = Client(auth=notion_token)

# the notion-objects database

db = Database(database_id, client)

# build an ndjson batch that we later sync into tinybird

batch = []

for record in db:

batch.append(record.to_json(flat=True))

data = "\n".join(batch)

# convert the title of the notion database into a valid tinybird datasource name

tinybird_datasource = re.sub('[^0-9a-zA-Z]+', '_', db.title.strip()).lower()

# post the batch into tinybird and create the table if it doesn't exist

requests.post(

'https://api.tinybird.co/v0/events',

params={

'name': tinybird_datasource,

'token': tinybird_token,

},

data=data

)

if __name__ == '__main__':

main()

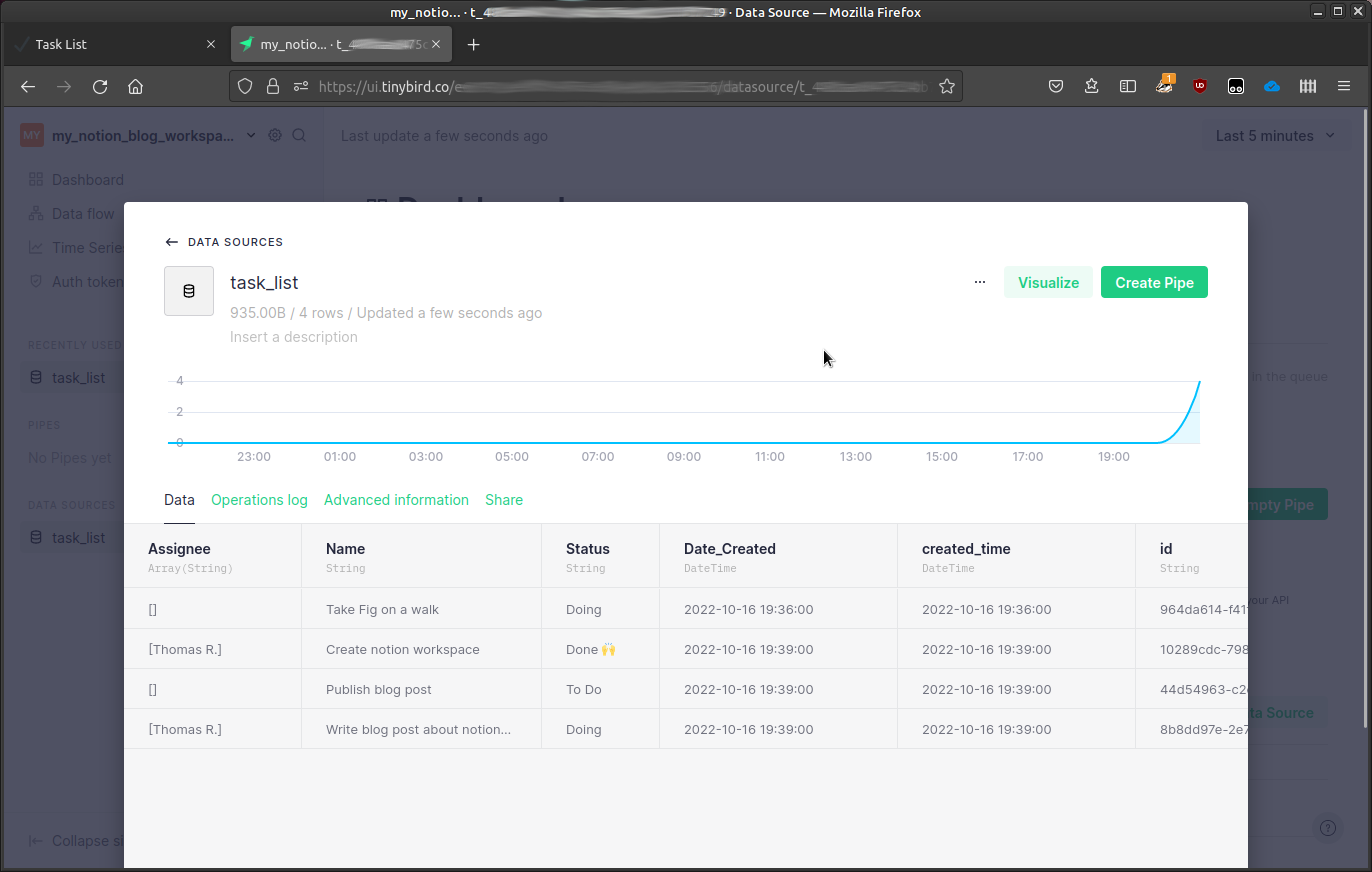

If you run this script, this is what you get in your Tinybird workspace:

Syncing changes

Next we want to deal with syncing data continuously. Since Notion currently does not provide webhooks to react to changes, we need to run a scheduled synchronization job. What would this job do? One way would be to truncate the datasource, and then run the full insert every time.

Alternatively, if we want to keep track of changes, we could only insert those notion objects that have changed since the last update.

Get the time of the latest synced change

notion-objects creates a default column last_edited_time that maps to the notion page’s last edit timestamp.

We could first query this from the the table using the Tinybird SQL endpoint GET /v0/sql.

r = requests.get(

f'https://api.tinybird.co/v0/sql',

params={

'q': f"SELECT max(last_edited_time) FROM {tinybird_datasource}",

'token': tinybird_token,

}

)

print(r.text) # 2022-10-16 19:36:00

An alternative could be to use the datasource’s operations log, but this is out of scope of this blog post.

Build a dynamic notion query filter

Now we want to fetch only those notion objects from the database that have been edited since the last time the data was synced. To that end, we dynamically build a notion query filter, based on the input of the previous query. If the database doesn’t exist yet, the Tinybird SQL query may fail, so we guard against that case.

if not r.ok or not r.text.strip():

# probably the table was not found, so we just use None

query = {}

else:

# build a query that fetches only those pages that were edited

# since the last time the database was synced

query = {

"filter": {

"timestamp": "last_edited_time",

"last_edited_time": {"after": r.text.strip()},

},

}

Now you can use notion-objects Database.query method to iterate over the result:

batch = []

for record in db.query(query):

batch.append(record.to_json(flat=True))

if not batch:

print("batch is empty, probably no data was edited")

return

data = "\n".join(batch)

De-duplicating data

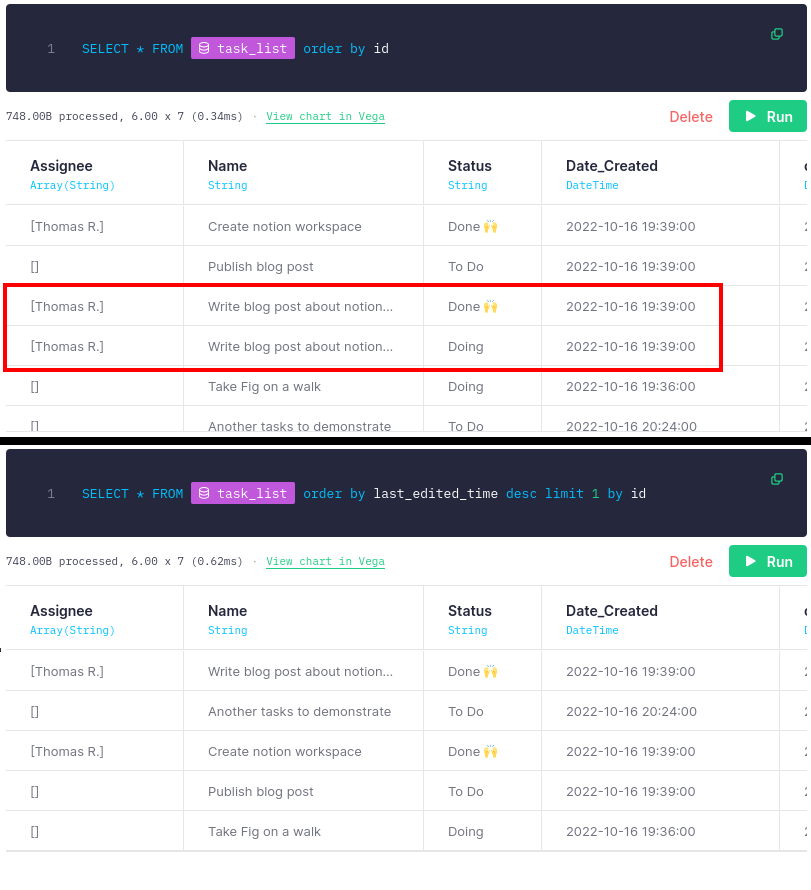

Obviously we now have records with the same ID multiple times. Once for every sync if it has changed since the last sync. There are several ways you can deal with duplicate data in Tinybird. I recommend checking out the Tinybird guide on de-duplication strategies for the full picture.

A simple query that only returns the latest items can look like this:

SELECT * FROM task_list

ORDER BY last_edited_time DESC

LIMIT 1 BY id

Here’s the effect in Tinybird:

Final Python program

Here’s the complete Python program

import re

import requests

from notion_client import Client

from notion_objects import Database

def main():

notion_token = "secret_"

tinybird_token = "p."

database_id = ""

# create the notion client

client = Client(auth=notion_token)

# the notion-objects database

db = Database(database_id, client)

# convert the title of the notion database into a valid tinybird datasource name

tinybird_datasource = re.sub('[^0-9a-zA-Z]+', '_', db.title.strip()).lower()

r = requests.get(

f'https://api.tinybird.co/v0/sql',

params={

'q': f"SELECT max(last_edited_time) FROM {tinybird_datasource}",

'token': tinybird_token,

}

)

if not r.ok or not r.text.strip():

# probably the table was not found, so we just use an empty filter

query = {}

else:

# build a query that fetches only those pages that were edited

# since the last time the database was synced

query = {

"filter": {

"timestamp": "last_edited_time",

"last_edited_time": {"after": r.text.strip()},

},

}

# build an ndjson batch that we later sync into tinybird

batch = []

for record in db.query(query):

batch.append(record.to_json(flat=True))

if not batch:

print("batch is empty, probably no data was edited")

return

data = "\n".join(batch)

# post the batch into tinybird and create the table if it doesn't exist

r = requests.post(

'https://api.tinybird.co/v0/events',

params={

'name': tinybird_datasource,

'token': tinybird_token,

},

data=data

)

print(r.status_code)

print(r.text)

if __name__ == '__main__':

main()

Final remarks

Ideally, Tinybird partners up with Notion to provide this as a connector. Until then, we’ll need our own serverless solutions to operationalize this.

We use similar code at LocalStack in AWS Lambda functions that do the synchronization on a schedule. You could easily package this as a Lambda function and just deploy it to AWS to do the synchronization when the lambda is invoked. In fact, when I developed the deployment, I tested my Lambda function on LocalStack locally first. Look out for a follow-up on our LocalStack blog explaining how that works.

notion-objects is still under development and the API may change drastically! The next iteration will be allowing update operations on Notion objects (already works for simple properties in notion-objects>=0.4.0), to write back into Notion databases.

What we haven’t covered so far are page deletions. With the current script, we cannot remove pages from the Tinybird database - it’s append only.

Stay tuned for more!